Research section

Hearing systems

Head of Section: Torsten Dau

Our research is concerned with auditory signal processing and perception, speech communication, audiology, objective measures of the auditory function, computational models of hearing, hearing instrument signal processing and multi-sensory perception.

Our goal is to increase our understanding of the human auditory system and to provide insights that are useful for technical and clinical applications, such as speech recognition systems, hearing aids, cochlear implants as well as hearing diagnostics tools.

Part of our research is carried out at the Centre for Applied Hearing Research (CAHR) in collaboration with the Danish hearing aid industry. More basic hearing research on auditory cognitive neuroscience and computational modeling is conducted in our Centre of Excellence for Hearing and Speech Sciences (CHeSS), in collaboration with the Danish Research Centre for Magnetic Resonance (DRCMR). Our clinically oriented research is conducted at the Copenhagen Hearing and Balance Centre (CHBC), located at Rigshospitalet, which enables us to collaborate closely with clinical scientists and audiologists.

Our section consists of six research groups with different focus areas. The Auditory Cognitive Neuroscience group (Jens Hjortkjær) studies how the auditory brain represents and computes natural sounds like speech. The Auditory Physics group (Bastian Epp) investigates how acoustic information is processed and represented along the auditory pathway. The Clinical and Technical Audiology group (Abigail Anne Kressner) is focused on cross-disciplinary research that combines knowledge from engineers and clinicians to increase the understanding of hearing impairment and how technology can be used to treat it. The Computational Auditory Modeling group (Torsten Dau) studies how the auditory system codes and represents sound in everyday acoustic environments. The Music and Cochlear Implants group (Jeremy Marozeau) aims to help restore music perception in cochlear implant patients by using several different approaches such as neuroscience, music cognition, auditory modeling, and signal processing. Finally, the Speech Signal Processing group (Tobias May) uses digital signal processing and machine learning to analyze and process sound. You can read more about each group and our exciting research projects in the menu on the left-hand side.

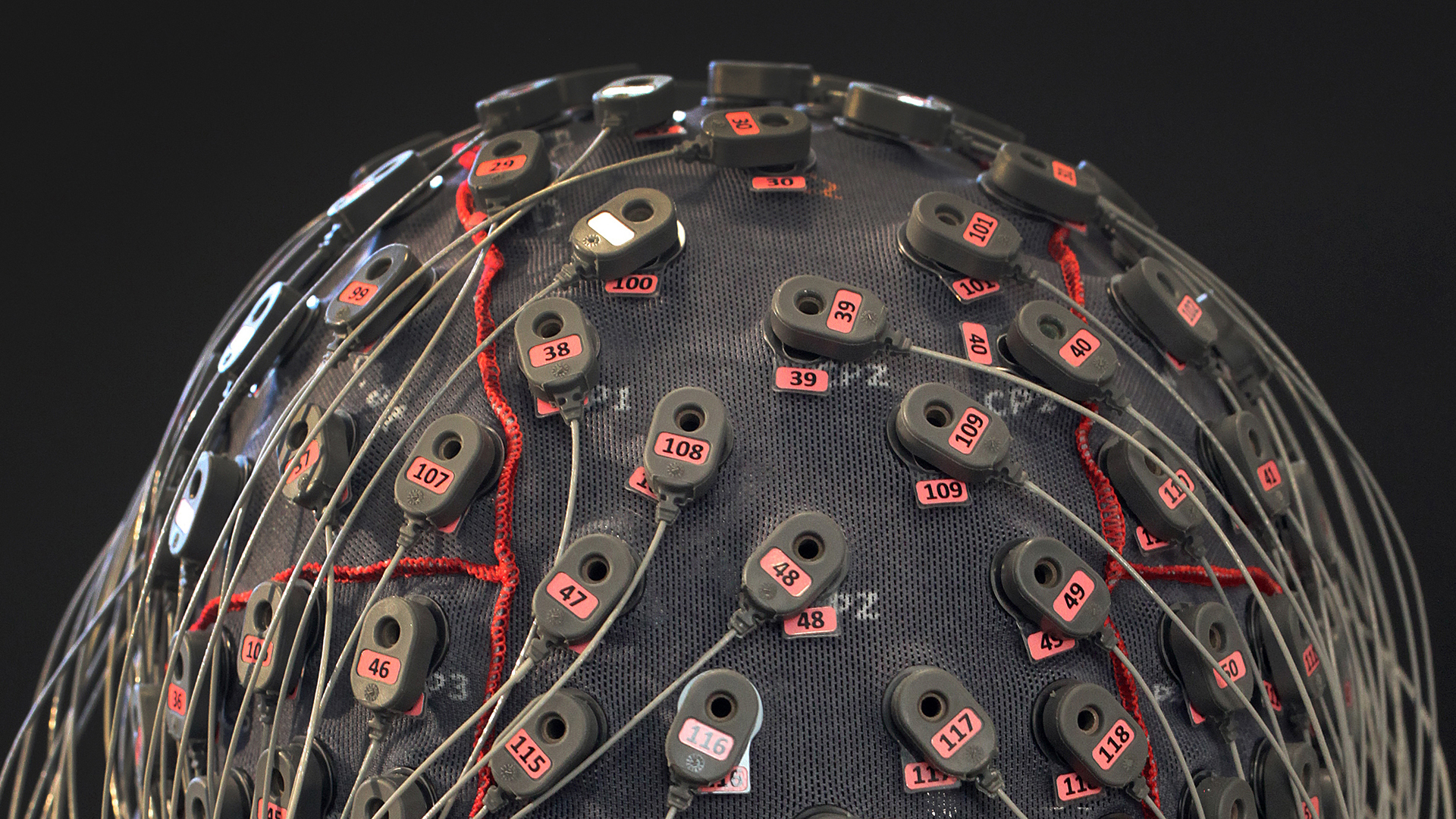

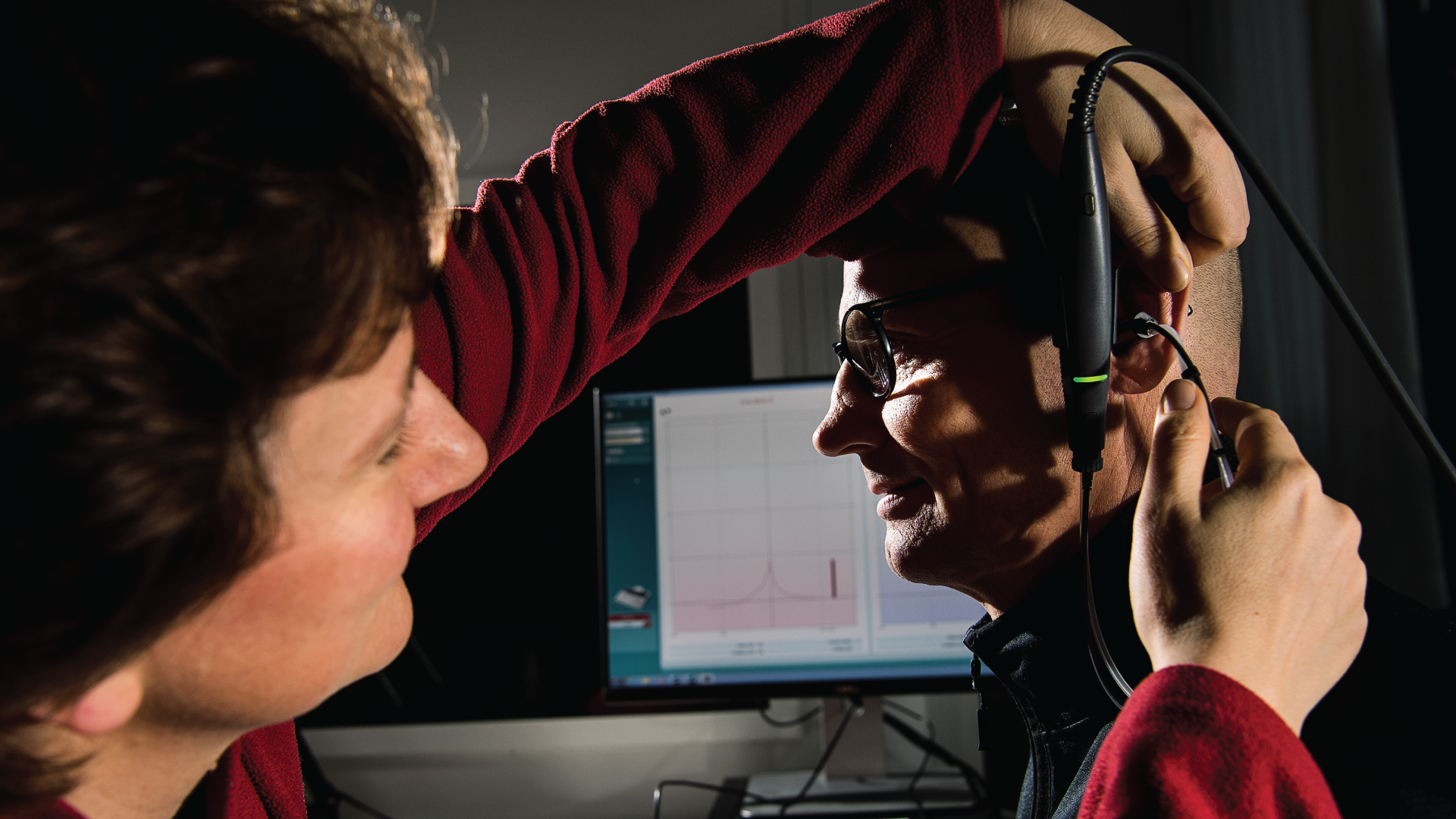

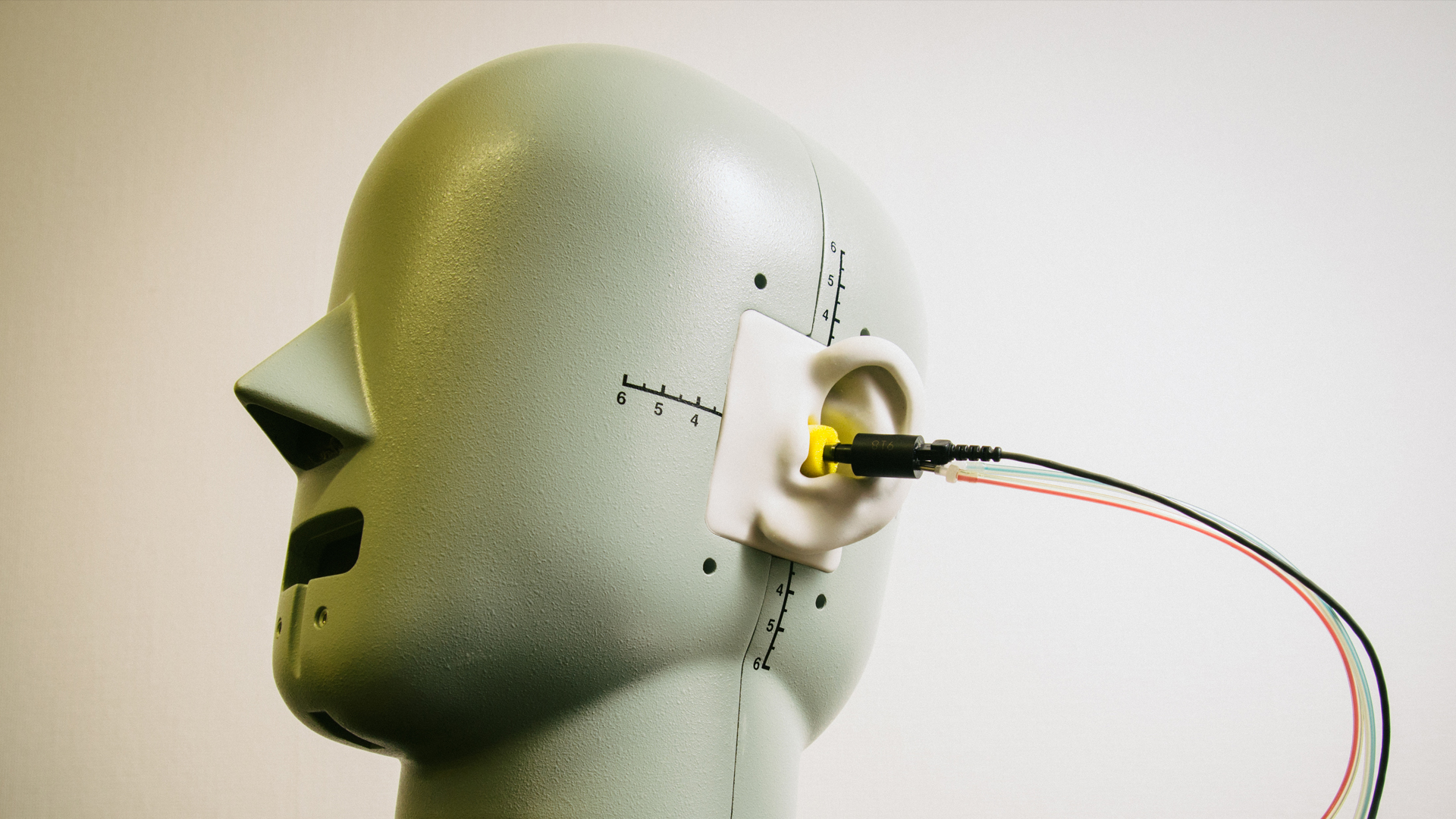

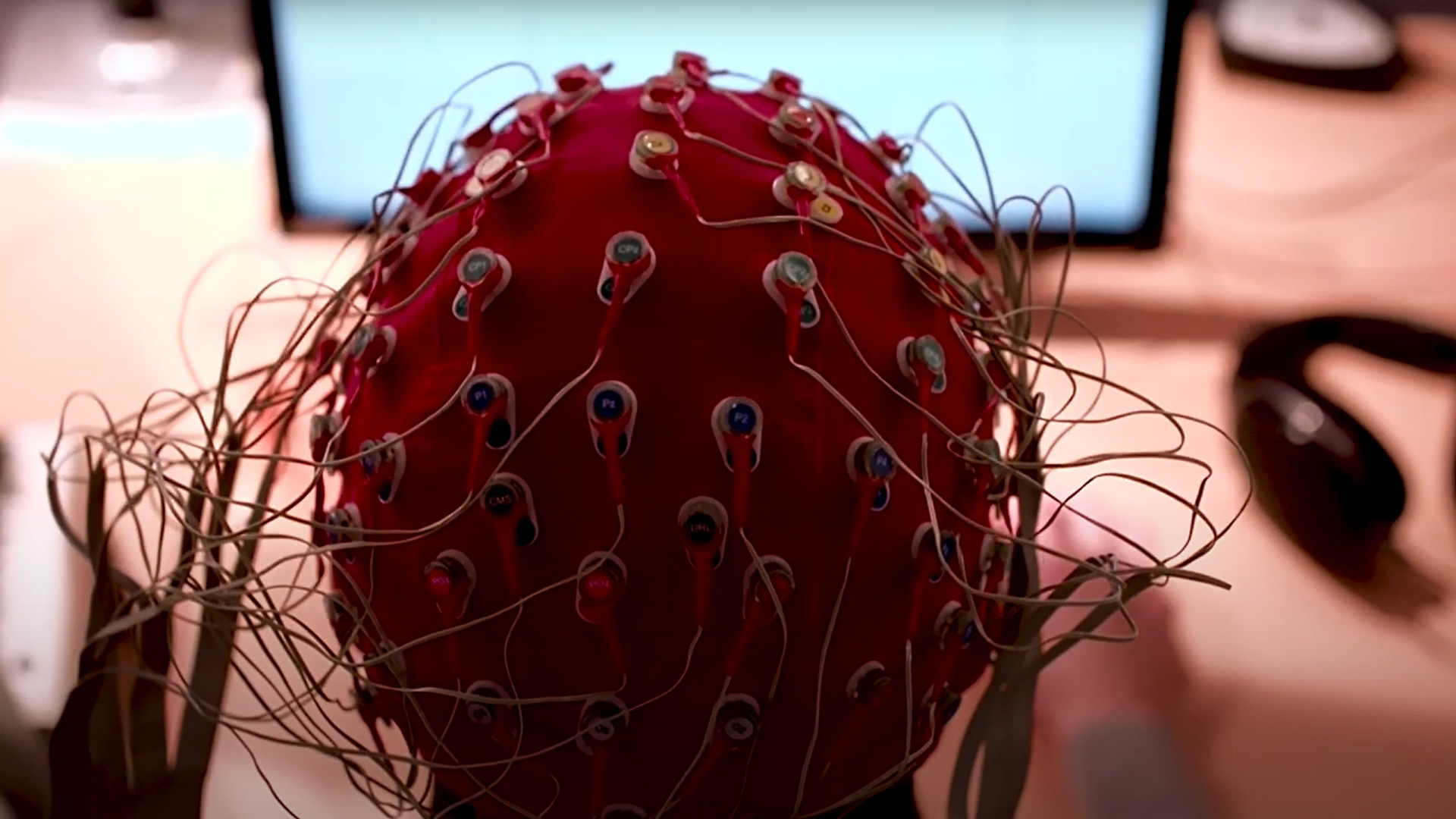

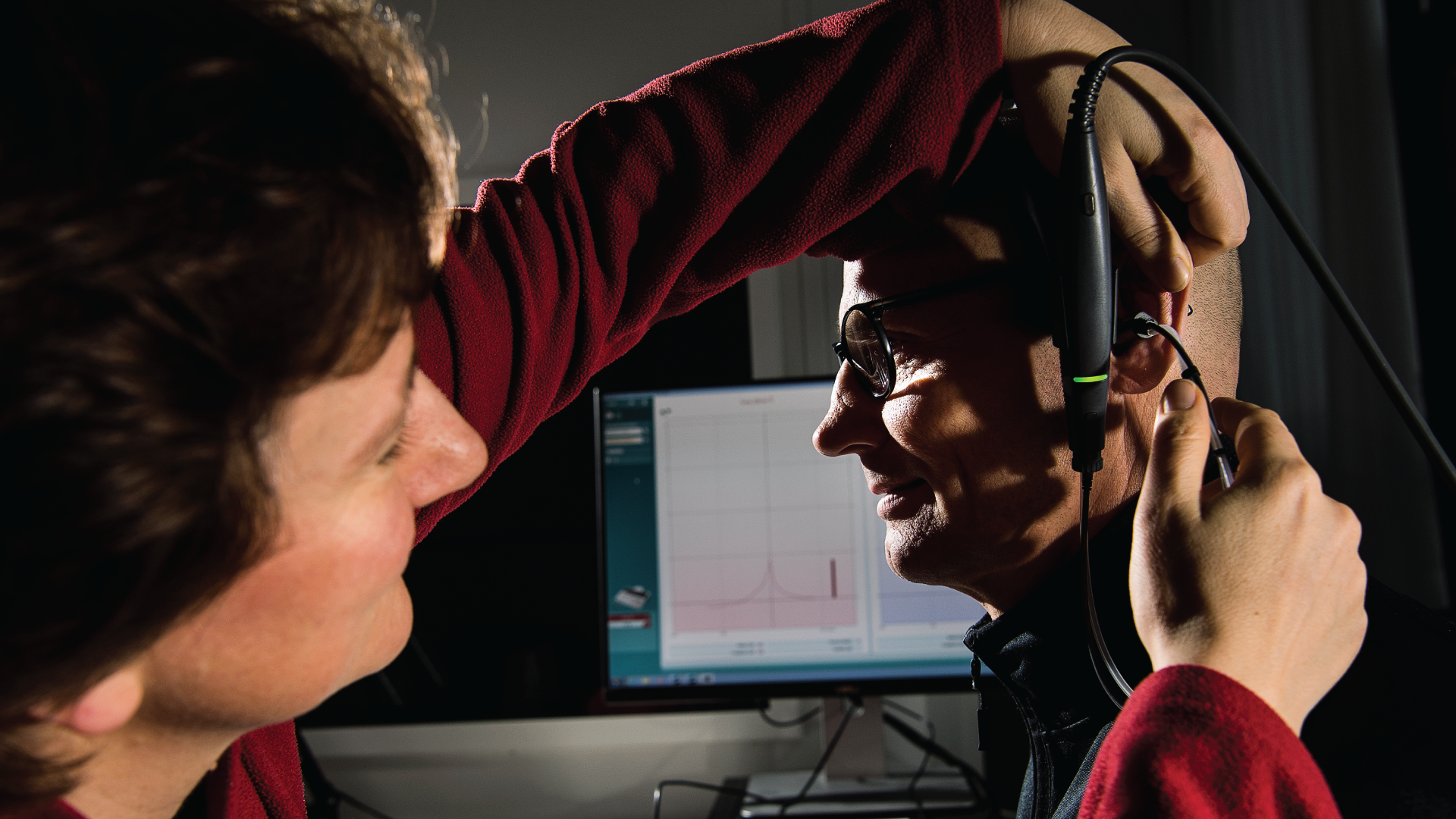

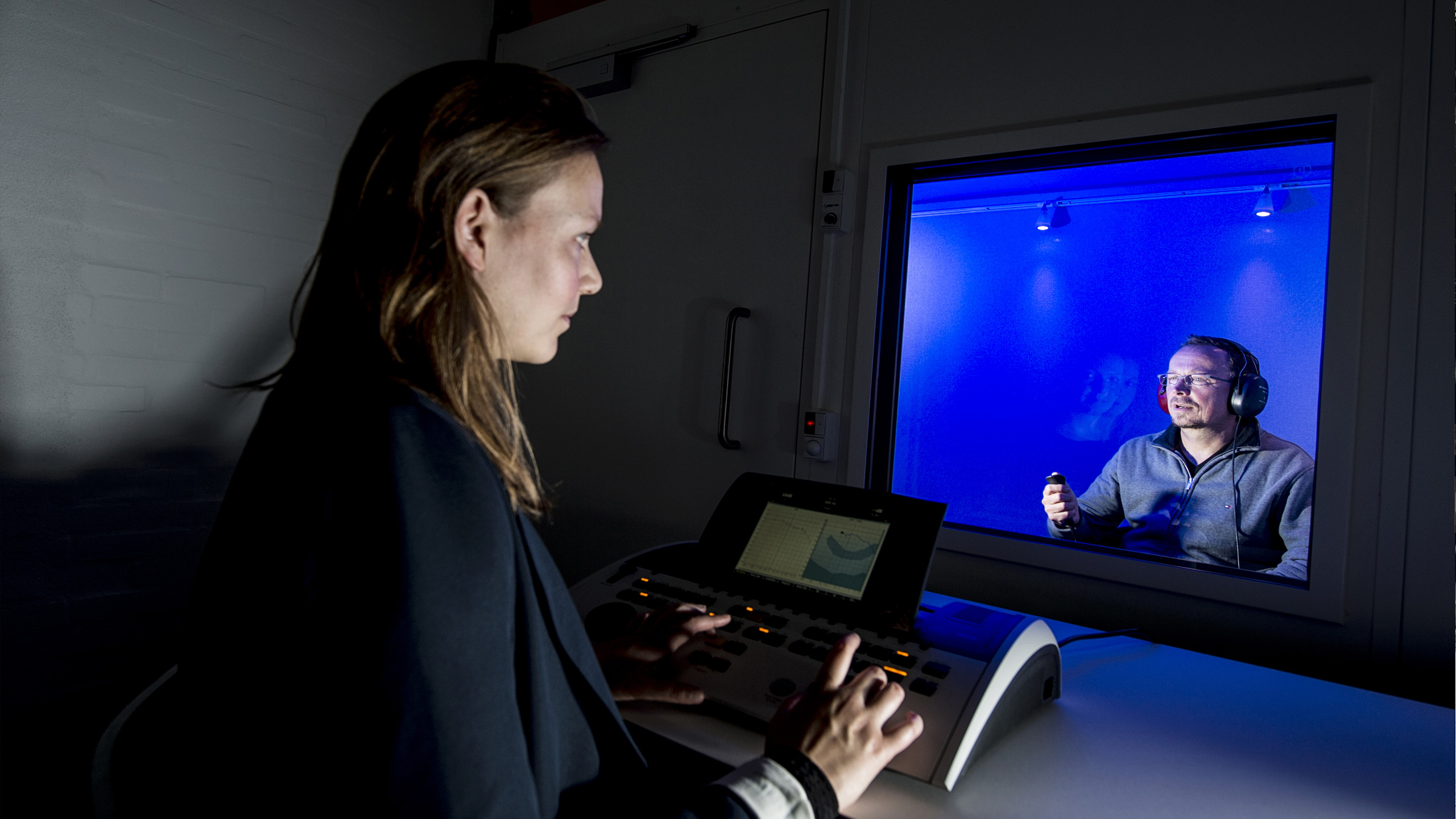

We have exciting lab facilities, including our Audiovisual Immersion Lab (AVIL), a physiology lab, a psychoacoustics lab, and two communication labs. The tools and facilities used for research and teaching include acoustically and electrically shielded testing booths, anechoic chambers, EEG and functional near-infrared spectroscopy (fNIRS) recording systems, an otoacoustic emission recording system, an audiological clinic, a virtual auditory environment, an eye-tracking system and a real-time hearing-aid signal processing research platform.

If you wish to take part in our research as a collaborator, student or test participant, then please don’t hesitate to contact us.

Research Groups

Research Facilities

Physiology lab

An electrophysiology lab with two electrically and acoustically shielded booths for electroencephalography and otoacoustic emission measurements.

Audiovisual Immersion Lab (AVIL)

AVIL is a virtual environment for hearing research and enables a realistic reproduction of the acoustics of real rooms, and the playback of spatial audio recordings.

Conversation lab

The Conversation Lab is designed for experiments involving multiple participants. In this lab, we recreate real-life conversations in order to investigate how interactive communication behavior shapes our capacity to communicate successfully with each other.

Psychoacoustics Lab

A psychoacoustics lab with four acoustically shielded listening booths used for audiometry, psychoacoustic and speech intelligibility experiments, where sound is typically presented through headphones.

Communication lab

The communication lab is used to investigate communication between two participants in order to learn how hearing loss or noisy environments affect conversations.

Clinic

An audiological clinic with equipment for audiometry, ear analysis, otoscopic inspection, and hearing aid measurements.

Lily Cassandra Paulick PhD student Department of Health Technology lpau@dtu.dk

Computational modelling of the perceptual consequences of individual hearing loss

Lily investigates the perceptual consequences of hearing loss using a computational modelling framework. While recent modelling studies have been reasonably successful in terms of predicting data from normal-hearing listeners, such approaches have failed to accurately predict the consequences of individual hearing loss, even when well-known impairment factors have been accounted for in the auditory processing assumed in the model. While certain trends reflecting the ‘average’ behavior in the data of hearing-impaired listeners can be reproduced, the large variability observed across listeners cannot yet be accounted for.

The hypothesis of the study is that, based on recent insights from data-driven ‘auditory profiling’ studies, computational modelling enables the prediction of perceptual outcome measures in different subpopulations (i.e. different auditory profiles), such that their distinct patterns in the data can be accounted for quantitatively and related to different impairment factors. More realistic simulations of the signal processing in the inner ear, the cochlea, will be integrated in the modelling. Most hearing losses are ‘sensorineural’ and have their origin in the cochlea, and sensorineural hearing loss has recently been demonstrated to also induce deficits at later stages of auditory processing in the brainstem and central brain.

Jonathan Regev Postdoc Department of Health Technology joreg@dtu.dk

Characterizing the effects of age and hearing loss on temporal processing and perception

Sensitivity to temporal cues in sound is a central aspect of the auditory system’s function, playing an important role in daily communication. Both age and hearing loss have been shown to affect supra-threshold temporal processing and perception. However, the effects are often confounded and recent studies have suggested that age and hearing loss may have separate, opposite effects on supra-threshold temporal envelope processing. This project aims to disentangle the effects of age and hearing loss on temporal processing by characterizing them separately, using computational auditory models as an explorative tool.

Colin Quentin Barbier PhD Student Department of Health Technology Mobile: +33674706994 cquba@dtu.dk

Characterizing the effects of compression and reverberation on spatial hearing for cochlear implant users

In normal hearing, localization and spatialization rely on both monaural and binaural cues. Because very strong compression schemes are used in cochlear implant signal processing, it is unclear how this compression affects the synchronization of sound signals between the ears, and therefore the binaural cues accessible to the user. Furthermore, clinicians traditionally tune cochlear implants independently, one ear after the other, as no binaural fitting guidelines exist to maximize binaural benefits.
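To make the concern about compression and binaural cues concrete, the following minimal sketch shows how two independently operating compressors can shrink an interaural level difference (ILD). It is an illustration only: the static compressor, its threshold and ratio, and the input levels are all assumed values, and the code is not a model of any actual cochlear implant processor.

```python
def compress_db(level_db, threshold_db=50.0, ratio=3.0):
    """Hypothetical static compressor acting on a level in dB.

    Below the threshold the level is unchanged; above it, the output
    grows by only 1/ratio dB per dB of input.
    """
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio


def ild_db(left_db, right_db):
    """Interaural level difference: left-ear level minus right-ear level."""
    return left_db - right_db


# Assumed input levels for a source on the listener's left side.
left_in, right_in = 70.0, 62.0
ild_in = ild_db(left_in, right_in)

# Each ear is compressed on its own, with no link between the devices.
left_out = compress_db(left_in)
right_out = compress_db(right_in)
ild_out = ild_db(left_out, right_out)

print(f"ILD before compression: {ild_in:.2f} dB")
print(f"ILD after independent compression: {ild_out:.2f} dB")
```

When both ear signals lie above the compression threshold, the ILD in this sketch is divided by the compression ratio, which is one way a level-based binaural cue could be weakened by unlinked processing.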

In this project we will assess the influence of compression on binaural cues for bilateral cochlear implant users in realistic environments (more or less reverberant), with the objective of developing best-practice guidelines for clinicians that preserve these binaural cues as much as possible.

Lisbeth Birkelund Simonsen PhD student Department of Health Technology libisi@dtu.dk

New applications and test modalities for the Audible Contrast Threshold (ACT) test

This industrial PhD project is conducted in collaboration with Interacoustics A/S and Oticon A/S with the aim of improving the individualisation of hearing-aid fitting and rehabilitation through diagnostic measures. The clinically focused and fast ACT test is based on the principles of spectro-temporal modulation (STM) detection, a psychophysical (behavioural) and language-independent listening test with high correlations to aided speech-in-noise performance.

This PhD project will firstly explore electrophysiological response types to ACT stimulus paradigms, and secondly investigate how the ACT test in various forms can relate to standard speech audiometry. Furthermore, ACT as a potential measure of longitudinal hearing-aid benefit will be investigated.

Philippe Gonzalez Student Students s181758@student.dtu.dk

Binaural speech enhancement in noisy and reverberant environments using deep learning

A major challenge of hearing-impaired listeners is to focus on a specific target source in noisy and reverberant environments. Thus, developing technical solutions that can improve the quality and intelligibility of speech in such adverse acoustic conditions is of fundamental importance for a wide range of applications, such as mobile communication systems and hearing aids.

The recent success of deep neural networks (DNNs) has substantially elevated the performance of machine learning-based approaches in different fields, including automatic speaker identification, object recognition and enhancement of speech in adverse acoustic conditions. Despite this success, one major challenge of machine-learning-based speech denoising and dereverberation algorithms is the limited generalization to unseen acoustic conditions. Changing the properties of the acoustic scene between the training and the testing stage of the system introduces a mismatch, which, in turn, reduces the effectiveness. Moreover, very few approaches have looked at realistic binaural acoustic scenes in which a listener is focusing on a particular target source while both interfering noise and room reverberation are present.

Compared to single-channel approaches, which often consider anechoic speech contaminated by background noise, the acoustic variability of binaural scenes is substantially higher and affected by factors such as the direction of the target and the interfering sources, the type of background noise, the source-to-receiver distance and the amount of room reverberation. This project aims to investigate solutions for improving the generalization of learning-based binaural speech enhancement systems in noisy and reverberant environments.

Sinnet Greve Bjerge Kristensen Industrial PhD Department of Health Technology sgbkris@dtu.dk

Simultaneous electrophysiological measurements with auditory narrow-band stimuli: investigation of clinical consequences of interactions at the level of the auditory pathway

This industrial PhD project is conducted in collaboration with Interacoustics A/S and will investigate an optimized electrophysiological method for faster hearing threshold estimation in infants. Electrophysiological methods for establishing hearing thresholds are preferably performed in natural sleep; therefore, the measurement must be time-efficient. This project will investigate the balance between short testing time and diagnostic value using simultaneous stimulation in several frequency bands. After a ‘proof of concept’, the detailed consequences and interactions in the auditory pathway will be investigated in experimental work in populations with normal hearing and hearing loss.

Sarantos Mantzagriotis Student Students s203135@student.dtu.dk

Investigating novel pulse shapes through computational modeling of the neural-electrode interface and psychophysics experiments

A major limitation in cochlear implantation (CI) is the spread of current excitation induced by each electrode, resulting in reduced efficiency between the electrical pulse and auditory nerve responses. This current spreading mis-activates neuronal populations of the auditory nerve, resulting in poor frequency resolution, reduced dynamic range, and distortion of the original acoustical signal. It is hypothesized that the currently used rectangular pulses are far from optimal for maximizing information transmission within the neural-electrode interface.

In addition, a CI’s outcome is highly dependent on a patient’s anatomical characteristics such as cochlear morphology and auditory nerve fiber distribution. Current pre-surgical planning software for CI can reconstruct a patient-specific cochlear morphology based on high-resolution scans in order to identify the electrode location and depth that minimize current spread. However, these frameworks are not yet developed enough to identify optimal parameters for a CI stimulation strategy and pulse shapes.

The project aims to improve pitch discrimination and musical perception in CI by optimizing pulse shapes and stimulation strategy parameters in silico. Initially, it will investigate this by creating a computational framework that couples phenomenological neuronal models, based on animal experimentation, with 3D cochlear electrical conduction models. The framework will then be extended to investigate collective neuronal behavior based on population models, combining feedback from psychoacoustical experimentation.

Ingvi Örnolfsson PhD student Department of Health Technology rinor@dtu.dk

Scene-aware compensation strategies for hearing aids in adverse conditions

Hearing aids aim to improve speech intelligibility and hearing comfort for hearing-impaired listeners. This can be achieved through a series of signal processing algorithms. Among these are beamforming and noise reduction, which improve the signal by attenuating background noise but can produce unwanted artefacts and provide only limited improvements in noise-filled environments.

Hearing aids also provide frequency- and level-dependent amplification, known as dynamic range compression. This processing step amplifies soft sounds while maintaining loud sounds at a comfortable level. In quiet conditions this works well as the softer speech sounds are amplified. However, in adverse conditions the background noise between the speech gaps and the artefacts from previous signal processing stages are amplified. As a result, speech intelligibility and hearing comfort are diminished, and spatial cues can be distorted.

This project investigates scene-aware compensation strategies in which fast-acting compression is applied to a target signal and slow-acting compression to the background signal. In this way the soft speech signal is amplified while background sounds are kept at a comfortable listening level.
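The difference between fast-acting and slow-acting compression can be sketched with a simple level tracker followed by a static gain rule. This is a minimal illustration under assumed values (frame rate, time constants, threshold and ratio are all hypothetical) and is not the processing used in the project; the gain shown is the compressive gain reduction only, without any prescribed amplification.

```python
import math


def smooth_level_db(levels_db, fs, attack_s, release_s):
    """One-pole level tracker with separate attack and release time constants."""
    a_att = math.exp(-1.0 / (attack_s * fs))
    a_rel = math.exp(-1.0 / (release_s * fs))
    est = levels_db[0]
    tracked = []
    for x in levels_db:
        # Rising levels use the attack constant, falling levels the release.
        a = a_att if x > est else a_rel
        est = a * est + (1.0 - a) * x
        tracked.append(est)
    return tracked


def compressor_gain_db(level_db, threshold_db=45.0, ratio=3.0):
    """Gain reduction in dB applied above the compression threshold."""
    over = max(level_db - threshold_db, 0.0)
    return -over * (1.0 - 1.0 / ratio)


fs = 100  # assumed envelope frame rate in frames per second
# Assumed envelope: 1 s of loud sound (70 dB) followed by 1 s of soft speech (40 dB).
envelope_db = [70.0] * fs + [40.0] * fs

fast = smooth_level_db(envelope_db, fs, attack_s=0.005, release_s=0.05)
slow = smooth_level_db(envelope_db, fs, attack_s=0.005, release_s=2.0)

idx = fs + 20  # 200 ms after the level drop
gain_fast = compressor_gain_db(fast[idx])
gain_slow = compressor_gain_db(slow[idx])

print(f"fast-acting gain reduction 200 ms after the drop: {gain_fast:.1f} dB")
print(f"slow-acting gain reduction 200 ms after the drop: {gain_slow:.1f} dB")
```

In this sketch the fast-acting compressor has already released its gain reduction shortly after the loud segment ends, so the soft segment is passed at full gain, whereas the slow-acting compressor still holds the gain down and changes level only gradually.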

Niels Overby Postdoc Department of Health Technology niov@dtu.dk

Scene-aware compensation strategies for hearing aids in adverse conditions

Hearing aids aim to improve speech intelligibility and hearing comfort for hearing-impaired listeners. This can be achieved through a series of signal processing algorithms. Among these are beamforming and noise reduction, which improve the signal by attenuating background noise but can produce unwanted artefacts and provide only limited improvements in noise-filled environments.

Hearing aids also provide frequency- and level-dependent amplification, known as dynamic range compression. This processing step amplifies soft sounds while maintaining loud sounds at a comfortable level. In quiet conditions this works well as the softer speech sounds are amplified. However, in adverse conditions the background noise between the speech gaps and the artefacts from previous signal processing stages are amplified. As a result, speech intelligibility and hearing comfort are diminished, and spatial cues can be distorted.

This project investigates scene-aware compensation strategies in which fast-acting compression is applied to a target signal and slow-acting compression to the background signal. In this way the soft speech signal is amplified while background sounds are kept at a comfortable listening level.

Valeska Slomianka PhD student Department of Health Technology vaslo@dtu.dk

Characterizing listener behaviour in complex dynamic scenes

The aim of Valeska's project is to explore and analyze listener behavior in environments with varying degrees of complexity and dynamics. Specifically, listeners will be monitored continuously using various sensors, such as motion and eye trackers, to record body and head-movement trajectories, as well as eye-gaze throughout the experimental tasks. The underlying hypothesis is that difficulties in processing and analyzing a scene will be reflected in the tracked measures and that comparing behavior across different scenes will help pinpoint which aspects of the scenes pose challenges for the listener. This, in turn, will help to differentiate listener behavior and performance depending on the auditory profile that characterizes the individual listener’s hearing loss and as a function of the scene complexity. Furthermore, this grouping and characterization will support the selection of appropriate compensation strategies tailored to the individual listener (i.e., ‘profile aware’) and environment (i.e., ‘scene aware’).

Miguel Temboury Gutierrez Postdoc Department of Health Technology mtegu@dtu.dk

Computational modeling of auditory evoked potentials in the hearing-impaired system

An increasing number of patients who have been diagnosed as ‘normal-hearing’ by the standard pure-tone audiometric test have difficulties understanding speech. Research suggests that this ‘hidden’ hearing loss is related to ageing and has different origins and consequences from hearing-sensitivity impairment. It remains a challenge to diagnose ‘hidden’ hearing loss, as well as other forms of hearing impairment, through non-invasive measures that reflect the status of the neural processing throughout the auditory pathway. Auditory evoked responses measured with EEG have a low spatial resolution, which makes it hard to differentiate between potential damage occurring in the auditory nerve due to neural degeneration and damage to presynaptic hair-cell activity in the cochlea.

Combining computational phenomenological and statistical models, this PhD project focuses on predicting individual auditory evoked responses and linking them to different types of hearing-impairment and age. The project is connected to the synergy project “Uncovering hidden hearing loss” (UHEAL).

Sam David Watson Student Assistant Office for Study Programmes and Student Affairs sadaw@dtu.dk

Perceptual and neural consequences of hidden hearing loss

UHEAL (‘Uncovering hidden hearing loss’) is a large-scale collaborative project aiming to confirm the existence of, and develop diagnostic tools for, ‘hidden hearing loss’ in humans. While temporary hearing reductions caused by short-term loud noise exposure were not previously thought harmful, it is now thought that lasting damage could be done to the afferent synapses connecting to the sensing hair cells, termed synaptopathy. Such consequences were observed in a landmark study in mice. This PhD project aims to aid UHEAL in evidencing synaptopathy in humans, and furthermore to scrutinise the possible neural, perceptual, and behavioural consequences this hidden hearing loss might have.

Jonatan Märcher-Rørsted Postdoc Department of Health Technology jonmarc@dtu.dk

Correlates of auditory nerve fiber loss

Our ability to communicate in social settings relies heavily on our sense of hearing. Hearing loss can greatly diminish our ability to discriminate between speech and background sounds, and can therefore be detrimental for communication in everyday life. Although diagnosis and treatment of hearing loss have progressed, there are still many aspects of hearing loss which are not fully understood. Understanding how specific pathologies in the cochlea develop, and how they affect auditory processing and perception, is essential for correct treatment and diagnosis.

Recent research has discovered that the neural connection between the cochlea and the brain (i.e. the auditory nerve) can be damaged, even before a loss of sensitivity emerges. While it is known that this phenomenon is caused by excessive noise exposure and aging, robust diagnostics of such peripheral neural loss are missing. This project investigates potential correlates of auditory nerve fiber loss, and its functional consequence on auditory processing in the periphery, the mid-brain and the auditory cortex using clinical audiology and electrophysiological paradigms.

Computational auditory modelling

On April 1st, 2022, Helia Relaño-Iborra started as a postdoc in computational auditory modelling within the Center for Applied Hearing Research (CAHR). The current CAHR consortium (2021-2025) focuses on behavior in complex scenes and active communication, and Helia’s research addresses these aspects from an auditory modelling perspective. The main goal of auditory models is to provide a link between clinical assessments of hearing loss and psychoacoustic measurements, and in Helia’s work there will be a specific focus on speech intelligibility outcomes.

Helia’s research interests focus on the mechanisms that underlie humans’ ability to understand speech in adverse conditions. In her previous research she has explored aspects of basic auditory processing as well as cognitive processes such as listening effort. In her current postdoc project she focuses on predicting individual listeners’ behaviour by extending and validating state-of-the-art computational auditory models.

Maaike Charlotte Van Eeckhoutte Assistant Professor Department of Health Technology mcvee@dtu.dk

Audiology and Hearing Rehabilitation

On January 6th, Maaike Van Eeckhoutte started as a postdoc in Audiology and Hearing Rehabilitation. It is a new joint position in the Hearing Systems section at the Department of Health Technology at DTU and in the Department of Otorhinolaryngology, Head and Neck Surgery & Audiology at Rigshospitalet. A major goal is to facilitate the translation of research between the technical university and the clinic. The combination of technical and clinical aspects was exactly what attracted her and made her decide to move to Denmark. While having a background in clinical audiology, she has always had a strong interest in translating fundamental sciences into applications for clinical practice. Previously, she was conducting research in audiology and neuroscience during her time as a PhD student at the KU Leuven in Belgium, and translational research at the National Centre for Audiology in Canada, where she did her first postdoc. With this shared position, she sees many possibilities to set up new exciting research focused on helping the patient.

Tobias Dorszewski PhD Student Department of Health Technology tobdor@dtu.dk

Audiovisual deep learning for cognitive hearing technology

Situations involving multiple simultaneous conversations are very common in everyday life. Following speech in such noisy surroundings can be challenging, particularly for hearing-impaired individuals. My PhD project will hopefully bring us closer to having a wearable computing device that can help in these situations by amplifying speech from the people one wants to listen to. Such a device could benefit from multiple types of sensors, such as microphones, eye-gaze trackers, electroencephalography, or ego-centric video. Additionally, such a system would need a smart way of integrating this information, which could be achieved with multi-modal AI-based approaches.

Primarily, I am interested in using ego-centric video and deep learning for determining communication context. Communication context refers to the question “who is part of a conversation?”. It goes beyond instantaneous attention and related aspects such as “who is talking to me”, which are investigated in related works. Identifying communication context requires a new approach that can integrate audio and video from a longer temporal context. As a first step, I will be collecting ego-centric video and audio from multiple people during a communication experiment with multiple conversations happening simultaneously. Then, I will explore ways of analyzing the data with audio-visual deep learning approaches.

Mie Lærkegård Jørgensen Postdoc Department of Health Technology mielj@dtu.dk

Supra-threshold hearing characteristics in chronic tinnitus patients

Tinnitus is a challenging disorder which may result from different etiologies and phenotypes and lead to different comorbidities and personal responses. There are currently no standard ways to subtype the different tinnitus forms. As a result, any attempts to investigate tinnitus mechanisms and treatments have been challenged by the fact that the tinnitus population under study is not homogeneous. We suggest that subgrouping tinnitus patients based on a thorough examination of their hearing abilities offers the opportunity to investigate and treat more homogeneous groups of patients. This project will provide new knowledge about supra-threshold hearing abilities in tinnitus patients, such as loudness perception and binaural, spectral and temporal resolution, which can help us gain a better understanding of the hearing deficits in these patients and how they are related to tinnitus-related distress. In fact, the vast majority of people suffering from tinnitus have a measurable hearing loss. However, hearing aid (HA) treatment is currently not successful for all tinnitus patients. These large individual differences with respect to the effect of HA treatment suggest that more personalized HA fittings could lead to more successful tinnitus treatments. Here we propose that HA fittings can be personalized to tinnitus patients based on thorough examinations of patients’ hearing abilities. If successful, the knowledge obtained from this study could translate into more effective interventions for tinnitus patients, allowing more individualized and targeted treatments.

Axel Ahrens Assistant Professor Department of Health Technology aahr@dtu.dk

Speech communication challenges due to reverberation and hearing loss: Perceptual mechanisms and hearing-aid solutions

Hearing-impaired listeners are known to struggle to understand speech particularly in rooms with reverberation. The aim of this project is to understand the perceptual mechanisms of speech communication in listeners with hearing loss, particularly in the presence of reverberation. Furthermore, the influence of hearing aids and algorithms will be investigated.

Collaboration

Hearing Systems participates in a number of national and international research projects.

Assessment of Listening-related Fatigue in Daily-Life (ALFi) project

Conversing with family and friends is difficult for people with hearing loss. The brain has to compensate for the hearing loss and work harder in order to understand speech in busy environments of everyday life, and this is effortful, stressful, and tiring. While well-fitted hearing aids have been shown to improve speech intelligibility and reduce some of the physiological signs of listening effort, they do not necessarily reduce listening-related fatigue, which remains a significant problem for hearing-aid users.

The ALFi project proposes an innovative hybrid approach in which field and laboratory studies are initially run in parallel using a common experimental framework to determine behavioral and physiological measures sensitive to changes in listening-related stress and fatigue. This project will advance our understanding of listening fatigue as it occurs in the real world, develop a predictive model of the experience of fatigue states, and suggest ways to mitigate fatigue in hearing-aid users.

The project will be carried out in collaboration between Hearing Systems (Torsten Dau, Dorothea Wendt, Hamish Innes-Brown), Copenhagen University (Trine Flensborg-Madsen, Naja Hulvej Rod), University of Birmingham (Matthew Apps), Eriksholm Research Centre (Jeppe Høy Konvalinka Christensen, Dorothea Wendt, Hamish Innes-Brown, Ingrid Johnsrude) and University of Western Ontario (Ingrid Johnsrude) and has been supported by the William Demant Foundation.

Head of Section:

Torsten Dau Head of Sections, Professor Department of Health Technology Phone: +45 45253977 tdau@dtu.dk